Introduction

We store a significant amount of sensitive data online, such as personally identifying information (PII), trade secrets, family pictures, and customer information. The data that we store is often not protected in an appropriate manner.

Legislation, such as the General Data Protection Regulation (GDPR), incentivizes service providers to better preserve individuals' privacy, primarily through making the providers liable in the event of a data breach. This liability pressure has revealed a technological gap, whereby providers are often not equipped with technology that can suitably protect their customers. Encrypted Data Vaults fill this gap and provide a variety of other benefits.

This specification describes a privacy-respecting mechanism for storing, indexing, and retrieving encrypted data at a storage provider. It is often useful when an individual or organization wants to protect data in a way that the storage provider cannot view, analyze, aggregate, or resell the data. This approach also ensures that application data is portable and protected from storage provider data breaches.

Why Do We Need Encrypted Data Vaults?

Explain why individuals and organizations that want to protect their privacy and trade secrets, and to ensure data portability, will benefit from using this technology. Explain how providing a standard API for the storage of user data empowers users to "bring their own storage", giving them control of their own information. Explain how applications that are written against a standard API and assume that users will bring their own storage can separate concerns and focus on the functionality of their application, removing the need to deal with storage infrastructure (instead leaving it to a specialist service provider that is chosen by the user).

Historically, it has been very difficult to build a single system that requires client-side (edge) encryption for all data and metadata while also letting the user store data on multiple devices, share data with others, and search or query that data. Trade-offs are often made that sacrifice privacy in favor of usability, or vice versa.

Due to a number of maturing technologies and standards, we are hopeful that such trade-offs are no longer necessary, and that it is possible to design a privacy-preserving protocol for encrypted decentralized data storage that has broad practical appeal.

Ecosystem Overview

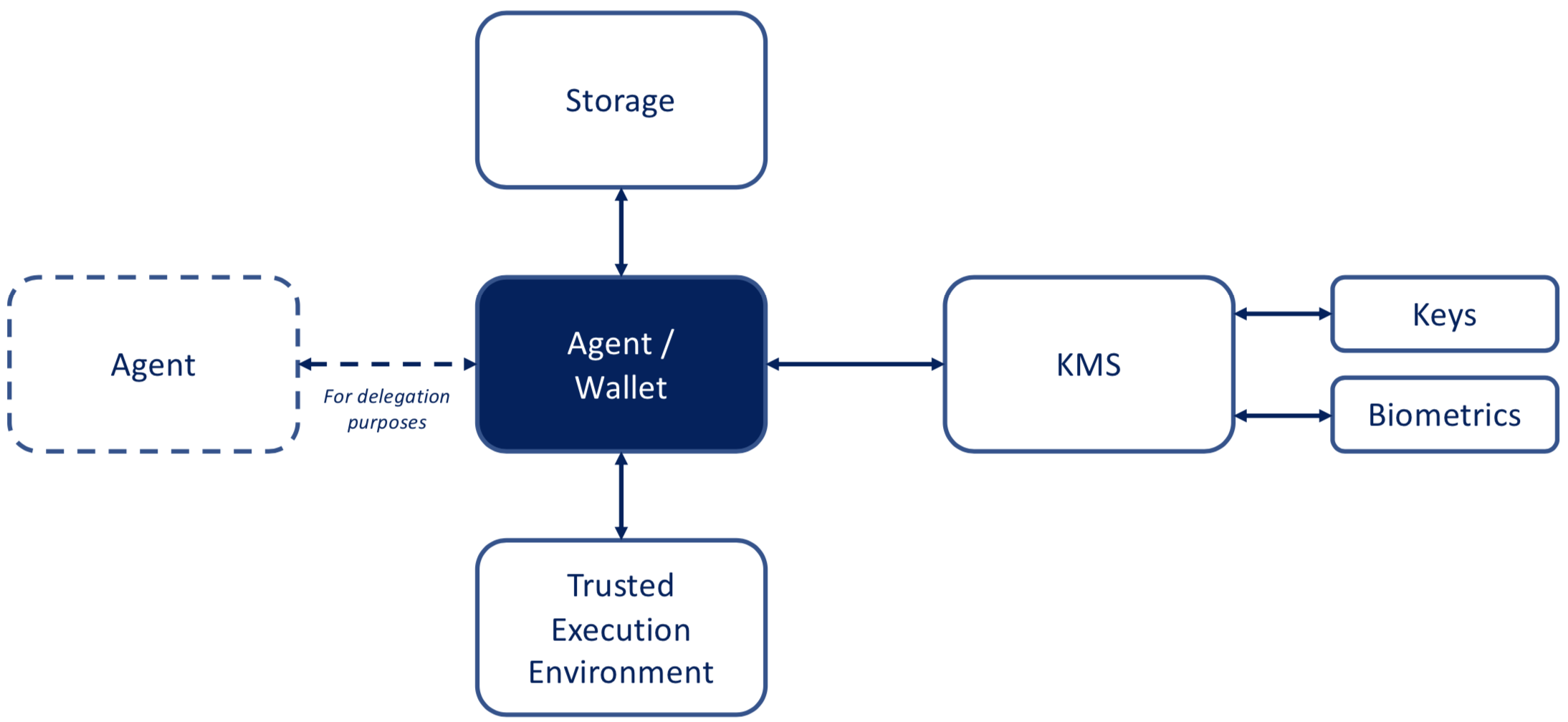

The problem of decentralized data storage has been approached from many angles, and personal data stores (PDS), decentralized or otherwise, have a long history in commercial and academic settings. Different approaches have resulted in variations in terminology and architectures. The diagram above shows the types of components that are emerging, and the roles they play. Encrypted Data Vaults fulfill a storage role.

This section describes the roles of the core actors and the relationships between them in an ecosystem where this specification is expected to be useful. A role is an abstraction that might be implemented in many different ways. The separation of roles suggests likely interfaces and protocols for standardization. The following roles are introduced in this specification:

- data vault controller: A role an entity might perform by creating, managing, and deleting data vaults. This entity is also responsible for granting storage agents authorization to access the data vaults under its control, and for revoking that authorization.

- storage agent: A role an entity might perform by creating, updating, and deleting data in a data vault. This entity is typically granted authorization to access a data vault by a data vault controller.

- storage provider: A role an entity might perform by providing a raw data storage mechanism to a data vault controller. This entity cannot see the data that it is storing, because all data is encrypted at rest and in transit to and from the storage provider.

Use Cases

These should be in a USE-CASES document.

The following four use cases have been identified as representative of common usage patterns, though they are by no means the only ones.

Store and use data

I want to store my data in a safe location. I don't want the storage provider to be able to see any data I store. This means that only I can see and use the data.

Search data

Over time, I will store a large amount of data. I want to search the data, but don't want the service provider to know what I'm storing or searching for.

Share data with one or more entities

I want to share my data with other people and services. I can decide to give other entities access to data in my storage area when I save the data for the first time or at a later stage. The storage should only give access to others when I have explicitly given consent for each item.

I want to be able to revoke the access of others at any time. When sharing data, I can include an expiration date for a third party's access to my data.

Store the same data in more than one place

I want to back up my data across multiple storage locations in case one fails. These locations can be hosted by different storage providers and can be accessible over different protocols. One location could be local on my phone, while another might be cloud-based. The locations should be able to synchronize with each other so that data is up to date in every location regardless of how I create or update data, and this should happen automatically, with as little help from me as possible.

Deployment topologies

Based on the use cases, we consider the following deployment topologies:

- Mobile Device Only: The server and the client reside on the same device. The vault is a library providing functionality via a binary API, using local storage to provide an encrypted database.

- Mobile Device Plus Cloud Storage: A mobile device plays the role of a client, and the server is a remote cloud-based service provider that has exposed the storage via a network-based API (e.g., REST over HTTPS). Data is not stored on the mobile device.

- Multiple Devices (Single User) Plus Cloud Storage: When adding more devices managed by a single user, the vault can be used to synchronize data across devices.

- Multiple Devices (Multiple Users) Plus Cloud Storage: When pairing multiple users with cloud storage, the vault can be used to synchronize data between multiple users with the help of replication and merge strategies.

Requirements

The following sections elaborate on the requirements that have been gathered from the core use cases.

Privacy and multi-party encryption

One of the main goals of this system is ensuring the privacy of an entity's data so that it cannot be accessed by unauthorized parties, including the storage provider.

To accomplish this, the data must be encrypted both while it is in transit (being sent over a network) and while it is at rest (on a storage system).

Since data could be shared with more than one entity, it is also necessary for the encryption mechanism to support encrypting data to multiple parties.
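One common way to encrypt data to multiple parties is to encrypt the payload once under a random content key and then wrap that content key separately for each recipient. The sketch below shows only that structure. It is an illustration under stated assumptions, not the mechanism this specification mandates: the names `Envelope`, `encrypt_to_many`, and `decrypt_as` are hypothetical, and the cipher and key-wrapping functions are supplied by the caller rather than chosen here.

```python
import secrets
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical signature for caller-supplied primitives: (key, data) -> bytes.
# A real deployment would plug in an authenticated cipher and a key-wrapping
# or key-agreement algorithm; none is assumed here.
Cipher = Callable[[bytes, bytes], bytes]


@dataclass(frozen=True)
class Envelope:
    ciphertext: bytes               # payload, encrypted once under a random content key
    wrapped_keys: Dict[str, bytes]  # recipient id -> content key wrapped for that recipient


def encrypt_to_many(plaintext: bytes, recipient_keys: Dict[str, bytes],
                    encrypt: Cipher, wrap: Cipher) -> Envelope:
    """Encrypt the payload once, then wrap the content key for each recipient."""
    content_key = secrets.token_bytes(32)
    ciphertext = encrypt(content_key, plaintext)
    wrapped = {rid: wrap(key, content_key) for rid, key in recipient_keys.items()}
    return Envelope(ciphertext, wrapped)


def decrypt_as(envelope: Envelope, recipient_id: str, recipient_key: bytes,
               decrypt: Cipher, unwrap: Cipher) -> bytes:
    """Recover the content key from this recipient's entry, then decrypt the payload."""
    content_key = unwrap(recipient_key, envelope.wrapped_keys[recipient_id])
    return decrypt(content_key, envelope.ciphertext)
```

With this layout, adding a recipient means adding one wrapped key; the stored ciphertext does not need to be re-encrypted.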

Sharing and authorization

It is necessary to have a mechanism that enables authorized sharing of encrypted information among one or more entities.

The system is expected to specify one mandatory authorization scheme, but also allow other alternate authorization schemes. Examples of authorization schemes include OAuth2, Web Access Control, and [[ZCAP]]s (Authorization Capabilities).
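The sharing use case asks for per-item consent, revocation at any time, and an optional expiration date. The following is a minimal in-memory sketch of those three checks, independent of any concrete authorization scheme such as OAuth2 or ZCAPs. All names (`Grant`, `ItemGrants`, `is_allowed`) are hypothetical and are not defined by this specification.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, Optional, Tuple


@dataclass
class Grant:
    expires_at: Optional[datetime] = None  # None means the grant has no expiry
    revoked: bool = False


@dataclass
class ItemGrants:
    """Hypothetical per-item grant table keyed by (item id, grantee id)."""
    _grants: Dict[Tuple[str, str], Grant] = field(default_factory=dict)

    def grant(self, item_id: str, grantee: str,
              expires_at: Optional[datetime] = None) -> None:
        # Consent is recorded per item, never for the vault as a whole.
        self._grants[(item_id, grantee)] = Grant(expires_at)

    def revoke(self, item_id: str, grantee: str) -> None:
        g = self._grants.get((item_id, grantee))
        if g is not None:
            g.revoked = True

    def is_allowed(self, item_id: str, grantee: str,
                   now: Optional[datetime] = None) -> bool:
        now = now or datetime.now(timezone.utc)
        g = self._grants.get((item_id, grantee))
        if g is None or g.revoked:
            return False
        # Access ends at the expiry instant; grants without expiry stay valid.
        return g.expires_at is None or now < g.expires_at
```

Access is denied by default: an item with no recorded grant for a given grantee is never shared.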

Identifiers

The system should be identifier agnostic. In general, identifiers that are a form of URN or URL are preferred. While it is presumed that [[DID-CORE]] (Decentralized Identifiers, DIDs) will be used by the system in a few important ways, hard-coding the implementations to DIDs would be an anti-pattern.

Versioning and replication

It is expected that information can be backed up on a continuous basis. For this reason, it is necessary for the system to support at least one mandatory versioning strategy and one mandatory replication strategy, but also allow other alternate versioning and replication strategies.
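As an illustration only, one simple pairing of a versioning strategy with a replication strategy is a per-document sequence number combined with "highest sequence wins" synchronization. The sketch below assumes that pairing; it is not the strategy this specification selects, and the names `VersionedDoc`, `put`, and `sync` are hypothetical. It does not resolve the case where two replicas hold different content at the same sequence number, which a real merge strategy would need to address.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class VersionedDoc:
    doc_id: str
    sequence: int      # incremented by the client on every update
    ciphertext: bytes  # opaque to the replica; never decrypted during sync


Replica = Dict[str, VersionedDoc]


def put(replica: Replica, doc: VersionedDoc) -> bool:
    """Store doc only if it is newer than what the replica already holds."""
    current = replica.get(doc.doc_id)
    if current is not None and current.sequence >= doc.sequence:
        return False  # stale or duplicate write; keep the existing copy
    replica[doc.doc_id] = doc
    return True


def sync(a: Replica, b: Replica) -> None:
    """Two-way sync: both replicas end up with the highest-sequence copy of each doc."""
    for doc in list(a.values()):
        put(b, doc)
    for doc in list(b.values()):
        put(a, doc)
```

Because only sequence numbers and ciphertext are compared and copied, the replicas can synchronize without access to any decryption key.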

Metadata and searching

Large volumes of data are expected to be stored using this system, and that data needs to be retrieved efficiently and selectively. To that end, an encrypted search mechanism is a necessary feature of the system.

It is important for clients to be able to associate metadata with the data such that it can be searched. At the same time, since privacy of both data and metadata is a key requirement, the metadata must be stored in an encrypted state, and service providers must be able to perform those searches in an opaque and privacy-preserving way, without being able to see the metadata.
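One way a server can match metadata it cannot read is a blinded index: the client replaces each metadata name and value with a keyed hash (HMAC) before upload, and the server compares the resulting tokens for equality. The sketch below assumes HMAC-SHA-256 and exact-match queries only. The names `blind` and `OpaqueIndex` are hypothetical, and the approach is shown as an example of the idea rather than as the search mechanism this specification defines. Note that equal values produce equal tokens, so the server can still observe how often a token repeats.

```python
import hashlib
import hmac
from typing import Dict, List, Set, Tuple


def blind(index_key: bytes, value: str) -> str:
    """Client side: replace a metadata name or value with a keyed, opaque token."""
    return hmac.new(index_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


class OpaqueIndex:
    """Server side: stores and matches tokens without ever holding the index key."""

    def __init__(self) -> None:
        self._entries: Dict[Tuple[str, str], Set[str]] = {}

    def add(self, doc_id: str, blinded_name: str, blinded_value: str) -> None:
        self._entries.setdefault((blinded_name, blinded_value), set()).add(doc_id)

    def find(self, blinded_name: str, blinded_value: str) -> List[str]:
        # Equality match on tokens only; the plaintext never reaches the server.
        return sorted(self._entries.get((blinded_name, blinded_value), set()))
```

To search, the client blinds the query with the same index key it used when storing, so a party without that key can neither build a matching query nor interpret the stored tokens.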

Protocols

Since this system can reside in a variety of operating environments, it is important that at least one protocol is mandatory, but that other protocols are also allowed by the design. Examples of protocols include HTTP, gRPC, Bluetooth, and various binary on-the-wire protocols. An HTTPS API is defined in .

Design goals

This section elaborates upon a number of guiding principles and design goals that shape Encrypted Data Vaults.

Layered and modular architecture

A layered architectural approach is used to ensure that the foundation for the system is easy to implement while allowing more complex functionality to be layered on top of the lower foundations.

For example, Layer 1 might contain the mandatory features for the most basic system, Layer 2 might contain useful features for most deployments, Layer 3 might contain advanced features needed by a small subset of the ecosystem, and Layer 4 might contain extremely complex features that are needed by a very small subset of the ecosystem.

Prioritize privacy

This system is intended to protect an entity's privacy. When exploring new features, always ask "How would this impact privacy?". New features that negatively impact privacy are expected to undergo extreme scrutiny to determine if the trade-offs are worth the new functionality.

Push implementation complexity to the client

Servers in this system are expected to provide functionality strongly focused on the storage and retrieval of encrypted data. The more a server knows, the greater the risk to the privacy of the entity storing the data, and the more liability the service provider might have for hosting data. In addition, pushing complexity to the client enables service providers to provide stable server-side implementations while innovation can be carried out by clients.

TBD